Harry Eggo

Principal Solutions Engineer

Updated February 19, 2026

3 min

Human‑in‑the‑Loop for LLM Workflows: Live Demo with Apryse WebViewer

Summary: Applying AI automation to documents doesn’t remove human review; it focuses it. To keep momentum, you need extraction that preserves context and an interface where reviewers can accept, reject, and comment on results. Here is the technical breakdown of how we built a Human-in-the-Loop (HITL) pipeline using Apryse WebViewer and LLMs.

Introduction

When an AI-automated workflow encounters a document, things often stall. Documents are downloaded to a local desktop, reviewers lose context, and collaboration becomes fragmented.

Human review is still essential for catching hallucinations. But to be effective within the workflow, and to give reviewers a high-quality experience, that review needs to happen inside the document, where it is reliable and accountable.

Imaginary Scenario: ESG Analyst-in-the-Loop

To give a feel for what’s possible, we set up an exercise with an imaginary human‑in‑the‑loop case embedded directly in the document workflow.

Take the case of an analyst classifying ESG data. ESG disclosures are scattered across narrative sections and tables, and labels depend on nuanced context, so analysts need to see and tag the actual document, not detached text.

Classification tools are powerful and can save a lot of time, but when they sit outside the document application, people copy/paste into side tools, approvals happen out‑of‑band, and the workflow stalls. Keeping review inside the app preserves context, speed, and auditability.

To solve this, we built a workflow that converts PDFs into structured intelligence, lets any LLM tool propose classifications, and brings review into WebViewer as a first‑class, in‑browser application so decisions happen in context and flow automatically into downstream systems.

What We Built

1. Layout-Aware Data Extraction

Using Apryse Smart Data Extraction, we converted the PDF into structured JSON. Document Structure Recognition retains headings, paragraphs, coordinates, and styles. These layout-aware signals are critical because LLMs perform better on contextually coherent chunks, such as headings, paragraphs, and tables, than on unstructured text.
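To make the idea of contextually coherent chunks concrete, here is a minimal sketch of heading-aware chunking. The JSON shape (`pages`, `elements`, `type`, `text`, `number`) is a simplified assumption for illustration, not the exact Smart Data Extraction output schema, and `chunk_by_heading` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str  # nearest heading above the paragraph ("" if none)
    page: int     # page number taken from the (assumed) "number" field
    text: str     # paragraph text to send to the classifier


def chunk_by_heading(doc: dict) -> list:
    """Pair each paragraph with the most recent heading that precedes it."""
    chunks, heading = [], ""
    for page in doc.get("pages", []):
        for element in page.get("elements", []):
            text = element.get("text", "").strip()
            if not text:
                continue
            if element.get("type") == "heading":
                heading = text  # later paragraphs inherit this heading
            elif element.get("type") == "paragraph":
                chunks.append(Chunk(heading, page.get("number", 0), text))
    return chunks


# Hypothetical, simplified extraction output
sample = {
    "pages": [
        {"number": 1, "elements": [
            {"type": "heading", "text": "Emissions"},
            {"type": "paragraph", "text": "Scope 1 emissions fell year over year."},
        ]},
        {"number": 2, "elements": [
            {"type": "paragraph", "text": "Scope 2 emissions were flat."},
            {"type": "heading", "text": "Board Oversight"},
            {"type": "paragraph", "text": "The board reviews ESG targets quarterly."},
        ]},
    ]
}

for chunk in chunk_by_heading(sample):
    print(f"p{chunk.page} [{chunk.heading}] {chunk.text}")
```

Because the heading carries across the page break, the paragraph on page 2 still arrives at the classifier with its "Emissions" context attached.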

2. LLM-Based Classification (FinBERT-ESG)

With structured text in hand, we passed the data to an LLM. For our demo, we used a lightweight FinBERT variant (available via Hugging Face) with nine ESG (Environmental, Social, and Governance) classes to tag relevant spans.

While we used a local model for transparency and control, this pipeline is model agnostic. You can easily swap this for any LLM, including external APIs.

The ESG classification is powered by the FinBERT-ESG (9 categories) model, available on Hugging Face as yiyanghkust/finbert-esg-9-categories. The underlying FinBERT method is described in Huang, Wang & Yang, Contemporary Accounting Research (DOI: 10.1111/1911-3846.12832).
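A minimal sketch of the tagging step might look like the following. It is not the demo's actual code: `tag_spans`, the 0.7 score threshold, and the assumption that the model emits a `Non-ESG` label to skip are ours, and `fake_classify` is a stand-in so the example runs without downloading anything. The classifier is passed in as a plain callable, which is one way to keep the pipeline model-agnostic.

```python
MODEL_ID = "yiyanghkust/finbert-esg-9-categories"


def load_finbert_esg():
    """Build a local classifier (requires `transformers`; downloads the model)."""
    from transformers import pipeline  # lazy import: the rest runs without it

    pipe = pipeline("text-classification", model=MODEL_ID)
    # The pipeline returns one {"label": ..., "score": ...} dict per input text.
    return lambda texts: pipe(texts, truncation=True)


def tag_spans(texts, classify, min_score=0.7, skip_label="Non-ESG"):
    """Keep only confident, ESG-relevant predictions.

    `classify` is any callable mapping a list of strings to a list of
    {"label", "score"} dicts, so a local model or an external API both fit.
    """
    tagged = []
    for text, result in zip(texts, classify(texts)):
        if result["label"] == skip_label or result["score"] < min_score:
            continue  # drop non-ESG text and low-confidence guesses
        tagged.append({"text": text, "label": result["label"],
                       "score": result["score"]})
    return tagged


def fake_classify(texts):
    """Stand-in classifier with made-up scores, for illustration only."""
    return [
        {"label": "Climate Change", "score": 0.98} if "emission" in t.lower()
        else {"label": "Non-ESG", "score": 0.90}
        for t in texts
    ]


spans = tag_spans(
    ["Scope 1 emissions fell year over year.", "See page 12 for details."],
    fake_classify,
)
print(spans)
```

With `transformers` installed, `tag_spans(texts, load_finbert_esg())` would run the local model instead; switching to an external API would only mean supplying a different `classify` callable.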

Note: If you prefer calling an external model, our docs show how to do so with OpenAI’s Python API.

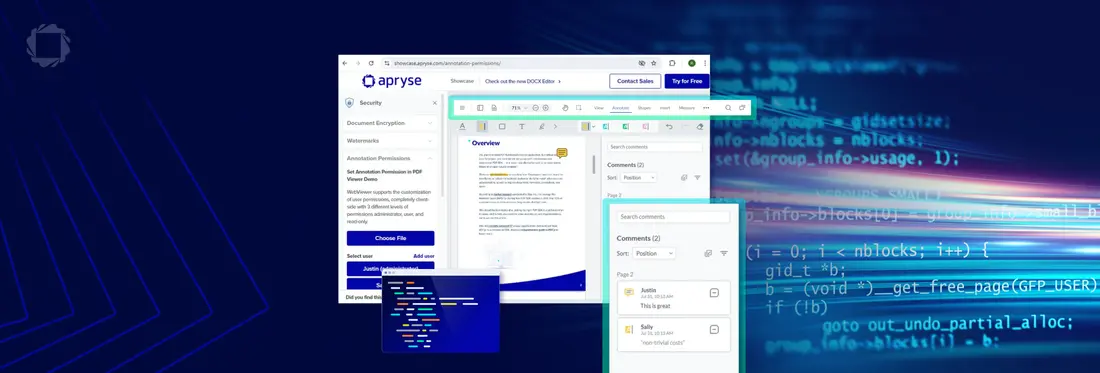

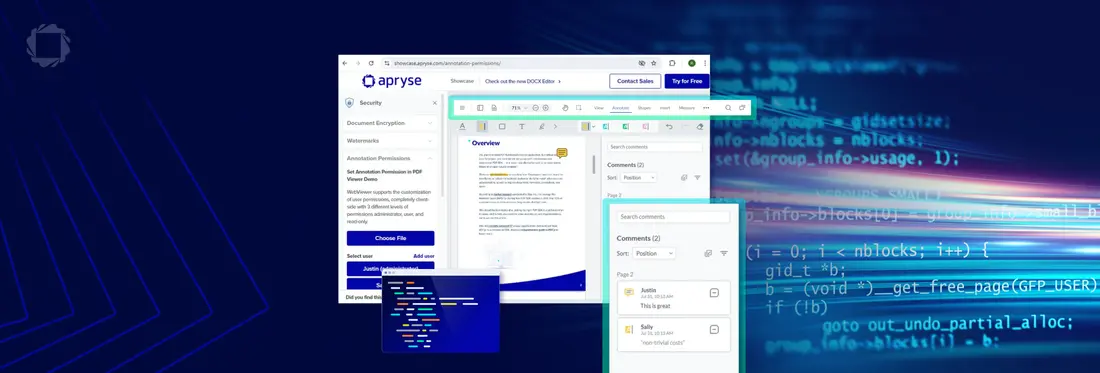

3. Visualizing AI Thoughts in the Browser

Once the model tags a specific span of text, we need to show the user what it chose and where. In WebViewer, we searched for the matched text, resolved its coordinates, and created color-coded annotations (highlights + notes) to make classifications immediately reviewable. This was done fully in the web client for the demo, but it is also possible server-side.
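As a rough sketch of the color-coding step, the snippet below shapes tagged spans into highlight descriptions that a client could turn into annotations. It does not call WebViewer. The field names (`searchText`, `color`, `note`), the RGB values, and the label-to-pillar mapping are illustrative assumptions, and the label strings should be checked against what the model actually returns.

```python
import json

# Assumed grouping of category labels into E / S / G pillars (verify the
# exact label strings against the model before relying on this).
LABEL_TO_PILLAR = {
    "Climate Change": "E", "Natural Capital": "E", "Pollution & Waste": "E",
    "Human Capital": "S", "Product Liability": "S", "Community Relations": "S",
    "Corporate Governance": "G", "Business Ethics & Values": "G",
}
PILLAR_COLORS = {"E": [46, 160, 67], "S": [33, 136, 255], "G": [130, 80, 223]}
FALLBACK_COLOR = [128, 128, 128]  # gray for labels we do not recognize


def to_highlight(span: dict) -> dict:
    """Describe one highlight: what to search for, its color, and its note."""
    pillar = LABEL_TO_PILLAR.get(span["label"])
    return {
        "searchText": span["text"],
        "color": PILLAR_COLORS.get(pillar, FALLBACK_COLOR),
        "note": f'{span["label"]} ({span["score"]:.0%})',
    }


tagged = [
    {"text": "Scope 1 emissions fell year over year.",
     "label": "Climate Change", "score": 0.98},
    {"text": "The board reviews ESG targets quarterly.",
     "label": "Corporate Governance", "score": 0.91},
]
highlights = [to_highlight(span) for span in tagged]
print(json.dumps(highlights, indent=2))
```

Grouping nine categories into three colors is a design choice that keeps the page scannable; the note text still carries the fine-grained label and confidence for the reviewer.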

4. Human‑in‑the‑Loop Review & Collaboration

Reviewers used status markers such as Accept and Reject, along with real-time comments. We used annotation event listeners to record the details of each change (for example, IDs, users, and timestamps) and produce logs. Those logs can be fed back as training data or used to advance workflow steps, such as escalating a classification, inviting additional reviewers, or triggering downstream tasks.
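The logging side of this can be sketched as a small append-only audit log. This is an illustration under stated assumptions, not the demo's implementation: the status names (`Accepted`, `Rejected`), the workflow step names, and the helpers `record`, `next_action`, and `training_rows` are all hypothetical, and the events are assumed to arrive from the client's annotation listeners.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewEvent:
    annotation_id: str
    user: str
    status: str     # e.g. "Accepted" or "Rejected" (assumed status names)
    timestamp: str  # ISO 8601, UTC


def record(log: list, annotation_id: str, user: str, status: str) -> ReviewEvent:
    """Append one reviewer decision to the audit log."""
    event = ReviewEvent(annotation_id, user, status,
                        datetime.now(timezone.utc).isoformat())
    log.append(event)
    return event


def next_action(log: list, annotation_id: str) -> str:
    """Map the most recent decision on an annotation to a workflow step."""
    decisions = [e for e in log if e.annotation_id == annotation_id]
    if not decisions:
        return "await_review"
    latest = decisions[-1].status  # the log is append-only, so last is newest
    steps = {"Accepted": "trigger_downstream", "Rejected": "escalate"}
    return steps.get(latest, "await_review")


def training_rows(log: list) -> list:
    """Export the log as plain dicts, e.g. for later use as feedback data."""
    return [asdict(event) for event in log]


log = []
record(log, "annot-1", "dana", "Rejected")
record(log, "annot-1", "lee", "Accepted")   # a second reviewer overrides
record(log, "annot-2", "dana", "Rejected")
print(next_action(log, "annot-1"), next_action(log, "annot-2"))
```

Keeping every event rather than overwriting the status preserves who changed what and when, which is what makes the review accountable.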

Conclusion

Building a bridge between AI and human expertise doesn't have to be complicated. By combining structured data extraction with a robust web-based review UI, you can create workflows that are both automated and accountable.

Watch the webinar to see our SDK Lightning Demo on Human-in-the-Loop.

Ready to build your own? Get started at dev.apryse.com.