IDP: AI-Driven Table and Form Field Extraction

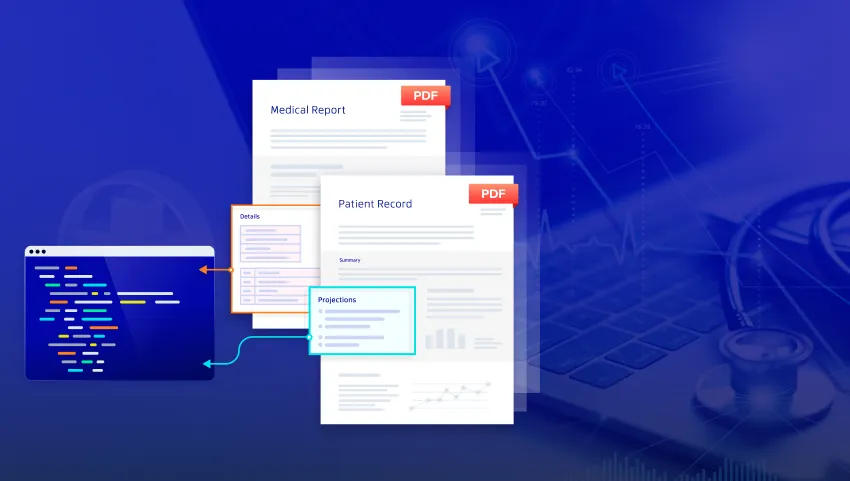

IDP takes the lead in simplifying the extraction of complex data structures from PDFs. Whether it's pulling specific information from financial reports or patient records, IDP's advanced algorithms ensure high accuracy and minimal setup.

IDP Use Cases

AI model training

Utilize IDP to feed high-quality, structured data extracted from diverse document types into AI models for training

Centralized Data Management

IDP helps you centralize data entry, allowing for streamlined queries and enhanced insight extraction.

Efficient Contract Review and Analysis

Leverage IDP for rapid extraction and analysis of key contract terms, aiding in faster decision-making and risk assessment.

OCR Use Cases

Digital Archive Searchability

Convert historical documents and archives into searchable formats, making it easy to access and analyze historical data.

Automated Form Processing

Transform paper-based forms into digital data, streamlining applications, registrations, and any form-intensive processes.

Real-time Document Verification

Ensure the accuracy and authenticity of your documents instantly with Apryse's real-time document verification capabilities. This feature is essential for industries requiring immediate validation of critical documents, such as finance, healthcare, and legal sectors.

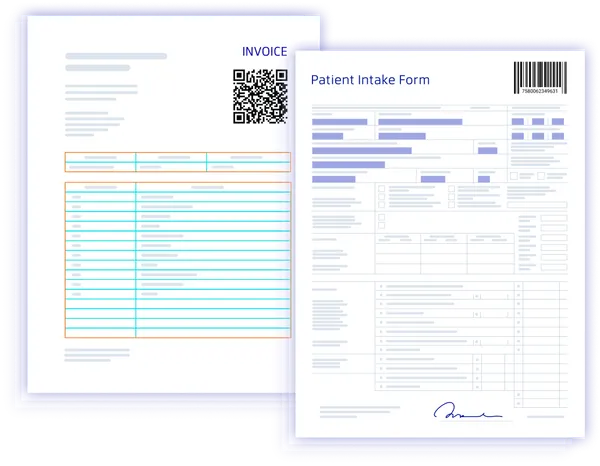

Barcode

Our barcode extraction technology brings seamless automation to document workflows, allowing accurate and efficient extraction of barcode data from a variety of documents and images. With support for over 100 barcode types, our solution is designed for versatility and reliability in high-demand environments.

Comprehensive Barcode Support

Effortlessly extract data from both 1D and 2D barcode formats, including popular types like QR codes, UPC, Data Matrix, and more. Whether dealing with product labels, shipping information, or inventory tags, our technology ensures that all barcode data is captured with precision.

Barcode Use Cases

Inventory and Asset Management

Accurately track and manage inventory by extracting barcode data from product labels and stock records. Our barcode extraction supports fast, bulk processing, making it ideal for warehouses and retail environments.

Logistics and Shipping Automation

Speed up shipping processes by extracting barcode information from labels, packing slips, and shipment documentation. Ensure smooth tracking and reduce errors across the supply chain with reliable, real-time barcode data capture.

Healthcare Recordkeeping

Seamlessly integrate barcode extraction into healthcare workflows, allowing for the quick identification and retrieval of patient records, medication information, and equipment inventory. Reduce manual errors and improve the accuracy of healthcare documentation

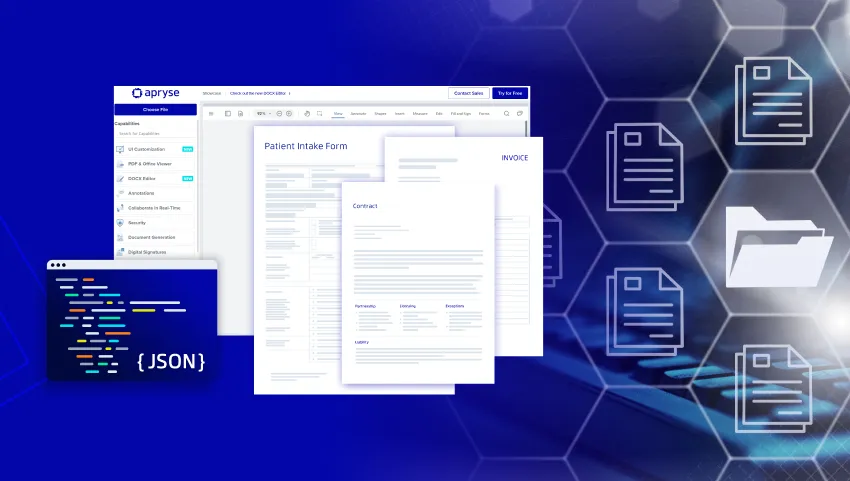

Template Library

Build and manage a library of custom templates using an intuitive UI. With the ability to create and fine-tune templates for specific document types, such as invoices, ACORD forms, and healthcare records, Template Extraction ensures precise data capture every time.

Template Extraction Use Cases

Insurance Claim Processing

Extract key fields from ACORD forms with speed and precision to improve claims management.

Invoice Management

Streamline accounts payable workflows by capturing essential invoice data for quick validation and processing.

Legal Document Analysis

Automate data extraction from standardized legal contracts, ensuring faster review and compliance checks.

RESOURCES

Innovative Technology. Proven Results

Extraction FAQ

Intelligent Document Processing (IDP) refers to the use of AI and machine learning technologies to automatically extract, understand, and process data from various document formats, transforming unstructured data into structured, actionable information.

While OCR (Optical Character Recognition) focuses on converting images of text into machine-encoded text, IDP encompasses a broader spectrum of capabilities, including the understanding of document structures, context recognition, and data extraction and processing.

Absolutely. Apryse's IDP solutions can be customized to meet the specific needs of various industries, with the ability to recognize and process industry-specific document formats and data types, ensuring high accuracy and relevancy in data extraction.

Yes, for details on extracting data from images and barcodes using Apryse's OCR and barcode, check the section on OCR and Intelligent Data Extraction in the official documentation.

By automating the extraction of structured data from diverse document types, IDP solutions not only streamline the data preparation phase but also significantly improve the quality of data fed into AI models, leading to more accurate and efficient learning outcomes. For a detailed exploration of these benefits and practical insights into leveraging IDP for AI development, read the full article here.

For strategies on dealing with documents of poor image quality, the OCR SDK feature highlights provide information on image processing techniques and the evolving capabilities for handwritten text recognition.

Apryse’s Barcode Extraction,supports over 100 barcode types, including 1D and 2D barcodes like QR codes, UPC, EAN, Code128, and DataMatrix. This makes it suitable for use across various industries.

Yes, Apryse’s Barcode Extraction, can accurately read damaged, skewed, or low-quality barcodes. This ensures reliable performance even in challenging conditions.

Yes, Apryse's Template Extraction includes an intuitive UI for creating and managing a library of custom templates. This allows you to fine-tune templates to suit the unique structure and data fields of your documents, ensuring precise data extraction every time.