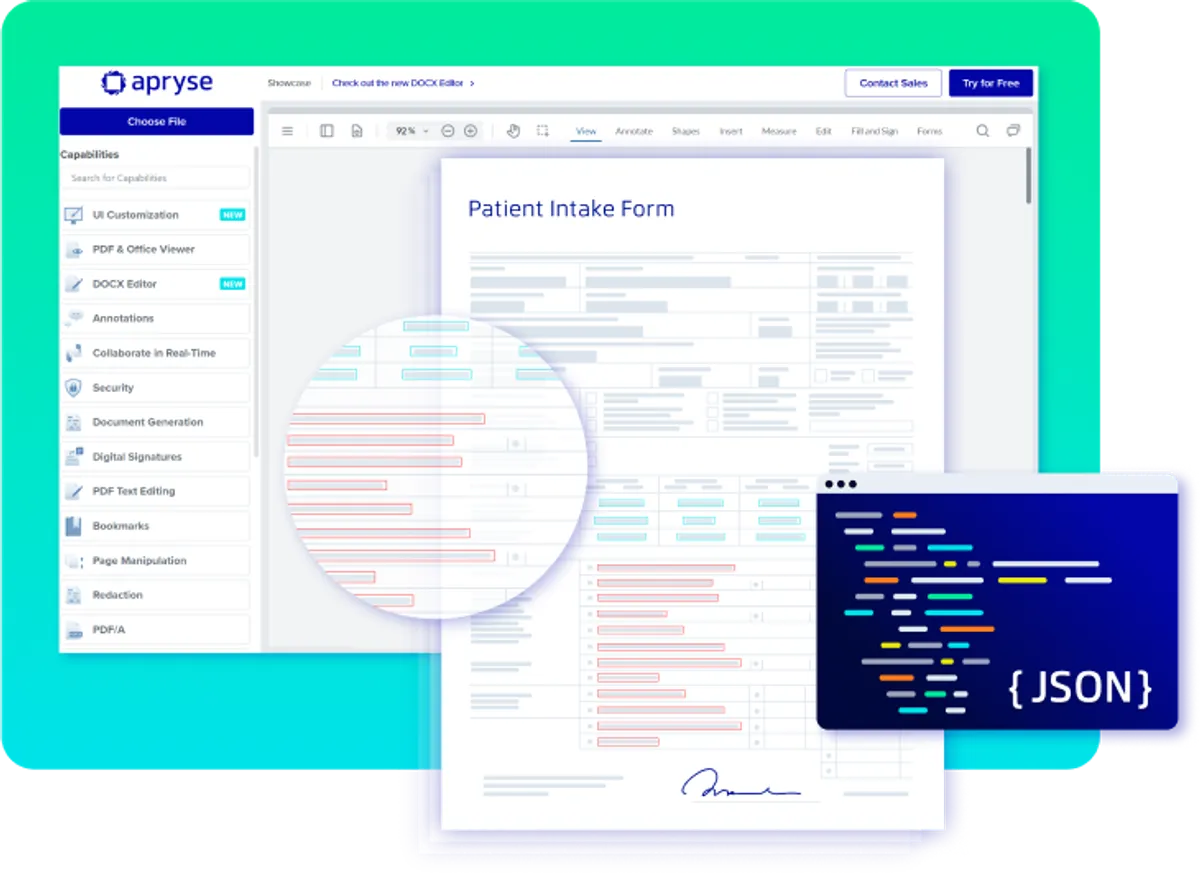

Smart Data Extraction (Previously Apryse IDP)

Same powerful SDK—new name. As “IDP” evolves into a broader category, we’re using clearer product names that reflect each layer. Smart Data Extraction handles layout, structure, labeling, and output—powering search, model training, and automation workflows.

Built for Document-Rich AI & Automation Workflows

Smart Data Extraction recognizes structure in complex documents—key-value pairs, tables, layout—and delivers machine-readable outputs like JSON, XML, and Excel.

From Chaos to Clarity: Structured Data Starts Here

Document Pre-Processing

Normalizes input files (deskewing, rotating, handling multi-column layouts) and prepares content for structured extraction before the AI steps in.

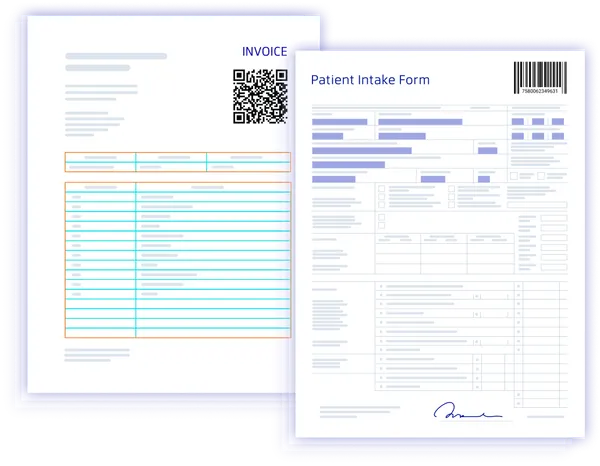

Key-Value Extraction

Identify fields like “Invoice #” or “Patient Name” from unstructured or scanned documents.

Table Recognition

Parse rows, merged cells, and numeric data from complex, layout-heavy tables.

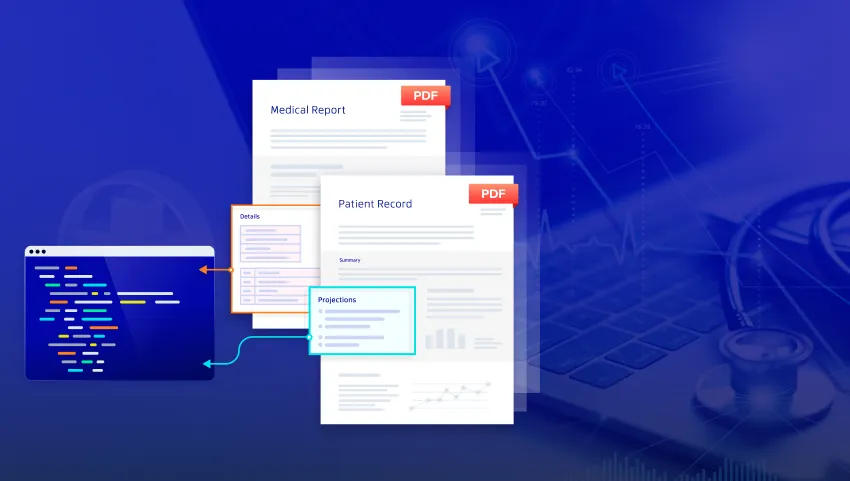

Full Document Element Extraction

Extract core components from PDFs—including text, images, fonts, layers, signatures, form fields, annotations, and metadata—so nothing gets lost in translation.

Document Structure & Form Field Detection

Understand document hierarchy (headings, paragraphs, lists) and spot visual markers like checkboxes and labels.

Output Formats

Supports JSON, XML, Excel, CSV—ideal for analytics, automation, or training pipelines.
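As a rough illustration of how extracted JSON might feed an analytics pipeline, the sketch below flattens key-value results into CSV rows using only the Python standard library. The JSON shape (`pages`, `fields`, `key`, `value`, `confidence`) and the helper names are assumptions made for this example, not the SDK's documented schema or API; adapt the field names to the output you actually receive.

```python
import csv
import io
import json

# Hypothetical extraction output. The real SDK's JSON schema may differ.
SAMPLE_JSON = """
{
  "pages": [
    {"page": 1, "fields": [
      {"key": "Invoice #", "value": "INV-1042", "confidence": 0.98},
      {"key": "Patient Name", "value": "Jane Doe", "confidence": 0.91}
    ]},
    {"page": 2, "fields": [
      {"key": "Total", "value": "118.40", "confidence": 0.87}
    ]}
  ]
}
"""

COLUMNS = ["page", "key", "value", "confidence"]


def fields_to_rows(document: dict) -> list:
    """Flatten the per-page field lists into one row per key-value pair."""
    rows = []
    for page in document.get("pages", []):
        for field in page.get("fields", []):
            rows.append({
                "page": page["page"],
                "key": field["key"],
                "value": field["value"],
                "confidence": field["confidence"],
            })
    return rows


def rows_to_csv(rows: list) -> str:
    """Serialize the flattened rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = fields_to_rows(json.loads(SAMPLE_JSON))
csv_text = rows_to_csv(rows)
print(csv_text)
```

Keeping the confidence score in each row lets a downstream step route low-confidence fields to manual review instead of discarding them.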

Deploy Anywhere

SDK-based deployment. Works offline, on-prem, hybrid, or air-gapped. Compatible with Java, .NET, C++, and Python.

What Powers the Precision

Extracting structure from PDFs isn’t straightforward: text isn’t always selectable, tables don’t behave like spreadsheets, and fields aren’t tagged. Apryse handles this complexity so you don’t have to. Under the hood, Apryse applies advanced computer vision to understand layout, semantics, and structure. We use real-time object detection (YOLO, short for You Only Look Once) to identify tables, fields, and sections, and BERT-based models to extract meaning from text. All models are trained exclusively on public and synthetic data, so your documents are never part of the training set. These are not general-purpose models; they’re purpose-built for understanding and extracting structure from documents like forms, contracts, and reports.

Smart Data Extraction Use Cases

Data Preparation

Extract labeled JSON from PDFs, scans, or DOCX—no manual tagging or templates needed. Perfect for driving automation, AI-powered search, or real-time decision-making.

Contract Analysis

Parse clauses, obligations, and parties from complex legal documents for faster review and AI-driven insights.

Search and Document Understanding

Transform long documents into context-rich outputs with headings, sections, and entities for retrieval-augmented generation.
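To make the retrieval-augmented generation use case concrete, here is a minimal sketch that groups extracted elements into heading-scoped chunks ready for indexing. The element list format (`type` and `text` keys) and the `Chunk` and `chunk_by_heading` names are hypothetical and exist only for this illustration; they are not the SDK's output schema.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """One retrieval unit: a heading plus the body blocks under it."""
    heading: str
    paragraphs: list = field(default_factory=list)

    @property
    def text(self) -> str:
        return "\n".join(self.paragraphs)


# Hypothetical structured output: a flat, ordered list of typed elements.
SAMPLE_ELEMENTS = [
    {"type": "heading", "text": "1. Parties"},
    {"type": "paragraph", "text": "This agreement is between Acme Corp and Beta LLC."},
    {"type": "heading", "text": "2. Obligations"},
    {"type": "paragraph", "text": "Acme Corp delivers the goods within 30 days."},
    {"type": "list_item", "text": "Beta LLC pays within 15 days of delivery."},
]


def chunk_by_heading(elements: list) -> list:
    """Start a new chunk at each heading and attach following blocks to it.

    Content before the first heading goes into an "(untitled)" chunk.
    Headings with no body text are skipped.
    """
    chunks = []
    current = Chunk(heading="(untitled)")
    for element in elements:
        if element["type"] == "heading":
            if current.paragraphs:
                chunks.append(current)
            current = Chunk(heading=element["text"])
        else:
            current.paragraphs.append(element["text"])
    if current.paragraphs:
        chunks.append(current)
    return chunks


chunks = chunk_by_heading(SAMPLE_ELEMENTS)
for chunk in chunks:
    print(chunk.heading, "->", len(chunk.paragraphs), "block(s)")
```

Chunking on headings rather than fixed character counts keeps each retrieved passage aligned with a section of the source document, which tends to give the generator more coherent context.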

Barcode

Our barcode extraction technology brings seamless automation to document workflows, allowing accurate and efficient extraction of barcode data from a variety of documents and images. With support for over 100 barcode types, our solution is designed for versatility and reliability in high-demand environments.

Comprehensive Barcode Support

Effortlessly extract data from both 1D and 2D barcode formats, including popular types like QR codes, UPC, Data Matrix, and more. Whether dealing with product labels, shipping information, or inventory tags, our technology ensures that all barcode data is captured with precision.

Barcode Use Cases

Inventory and Asset Management

Accurately track and manage inventory by extracting barcode data from product labels and stock records. Our barcode extraction supports fast, bulk processing, making it ideal for warehouses and retail environments.

Logistics and Shipping Automation

Speed up shipping processes by extracting barcode information from labels, packing slips, and shipment documentation. Ensure smooth tracking and reduce errors across the supply chain with reliable, real-time barcode data capture.

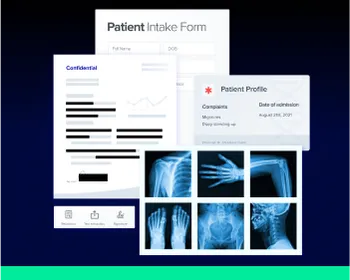

Healthcare Recordkeeping

Seamlessly integrate barcode extraction into healthcare workflows, allowing for the quick identification and retrieval of patient records, medication information, and equipment inventory. Reduce manual errors and improve the accuracy of healthcare documentation.

Extraction FAQ

Smart Data Extraction refers to the use of AI and machine learning technologies to automatically extract, understand, and process data from various document formats, transforming unstructured data into structured, actionable information.

We support PDF (native and scanned), DOCX, TIFF, JPEG, and PNG as inputs. Outputs include JSON, XML, CSV, and Excel.

Absolutely. Apryse's Smart Data Extraction solutions can be customized to meet the specific needs of various industries, with the ability to recognize and process industry-specific document formats and data types, ensuring high accuracy and relevancy in data extraction.

Yes. For details on extracting data from images and barcodes using Apryse's OCR and barcode extraction, see the section on OCR and Intelligent Data Extraction in the official documentation.

By automating the extraction of structured data from diverse document types, Smart Data Extraction not only streamlines the data preparation phase but also significantly improves the quality of data fed into AI models, leading to more accurate and efficient learning outcomes. For a detailed exploration of these benefits and practical insights into leveraging Smart Data Extraction for AI development, read the full article here.

Apryse’s Barcode Extraction supports over 100 barcode types, including 1D and 2D barcodes like QR codes, UPC, EAN, Code 128, and Data Matrix. This makes it suitable for use across various industries.

Yes, Apryse’s Barcode Extraction can accurately read damaged, skewed, or low-quality barcodes. This ensures reliable performance even in challenging conditions.